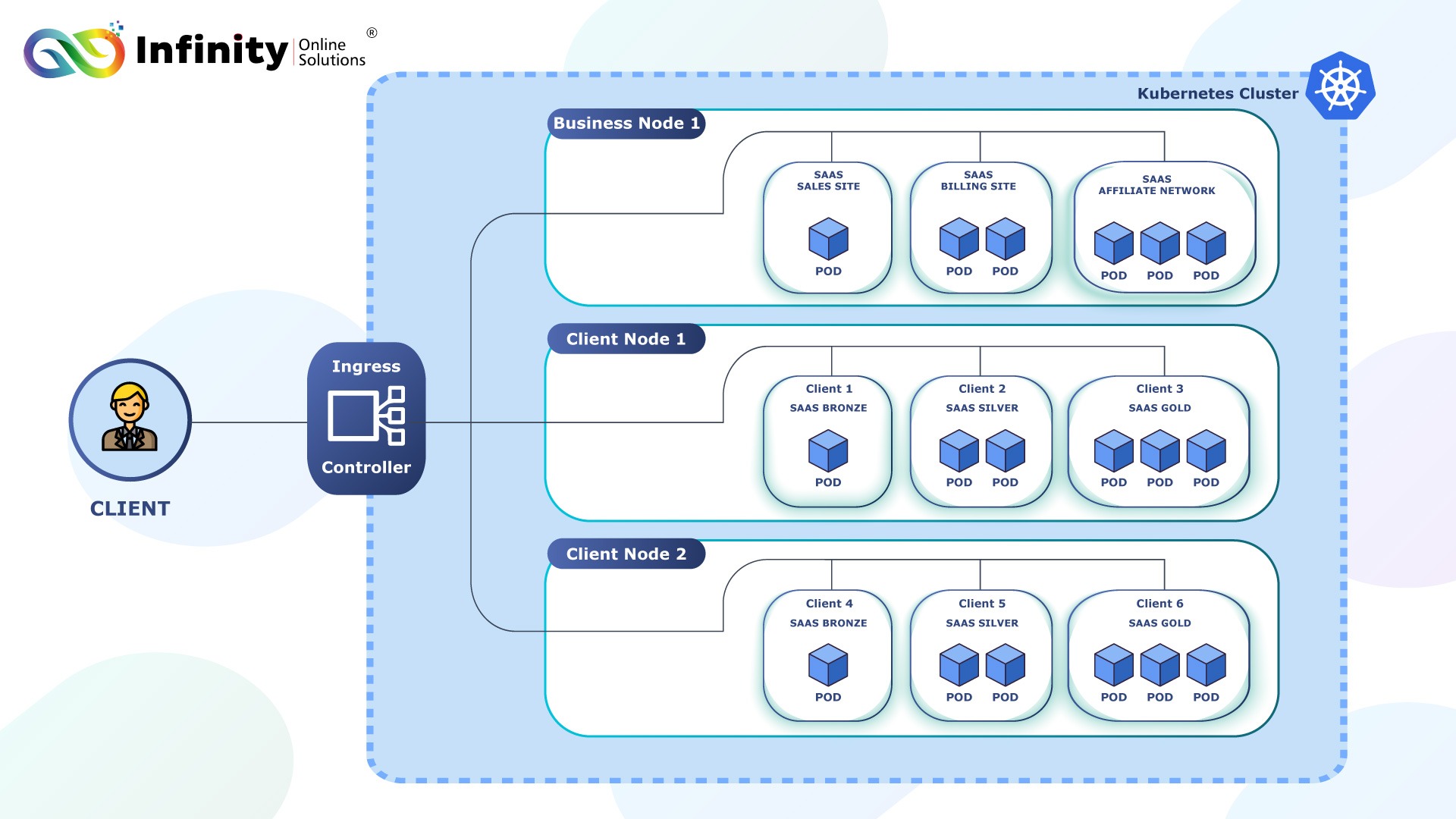

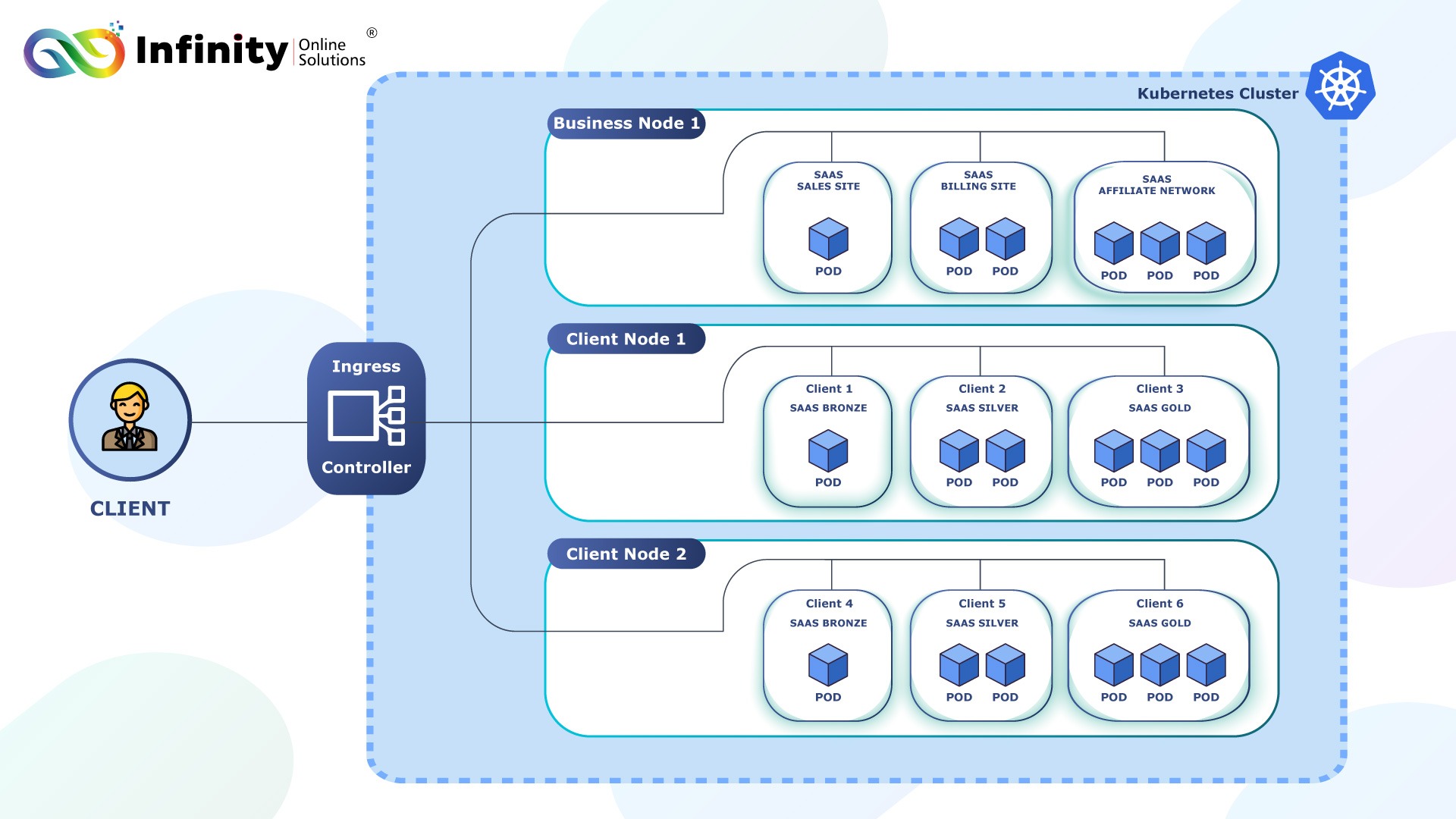

Are you a CTO, DevOps engineer, or IT manager looking to launch and scale your SaaS applications efficiently and securely? Whether you run self-managed or managed Kubernetes, it gives you the flexibility to optimize infrastructure, control costs, and keep performance high as you grow. In this guide, we explore how either approach can help you launch your infrastructure and scale your business while maintaining security, reliability, and cost-efficiency.

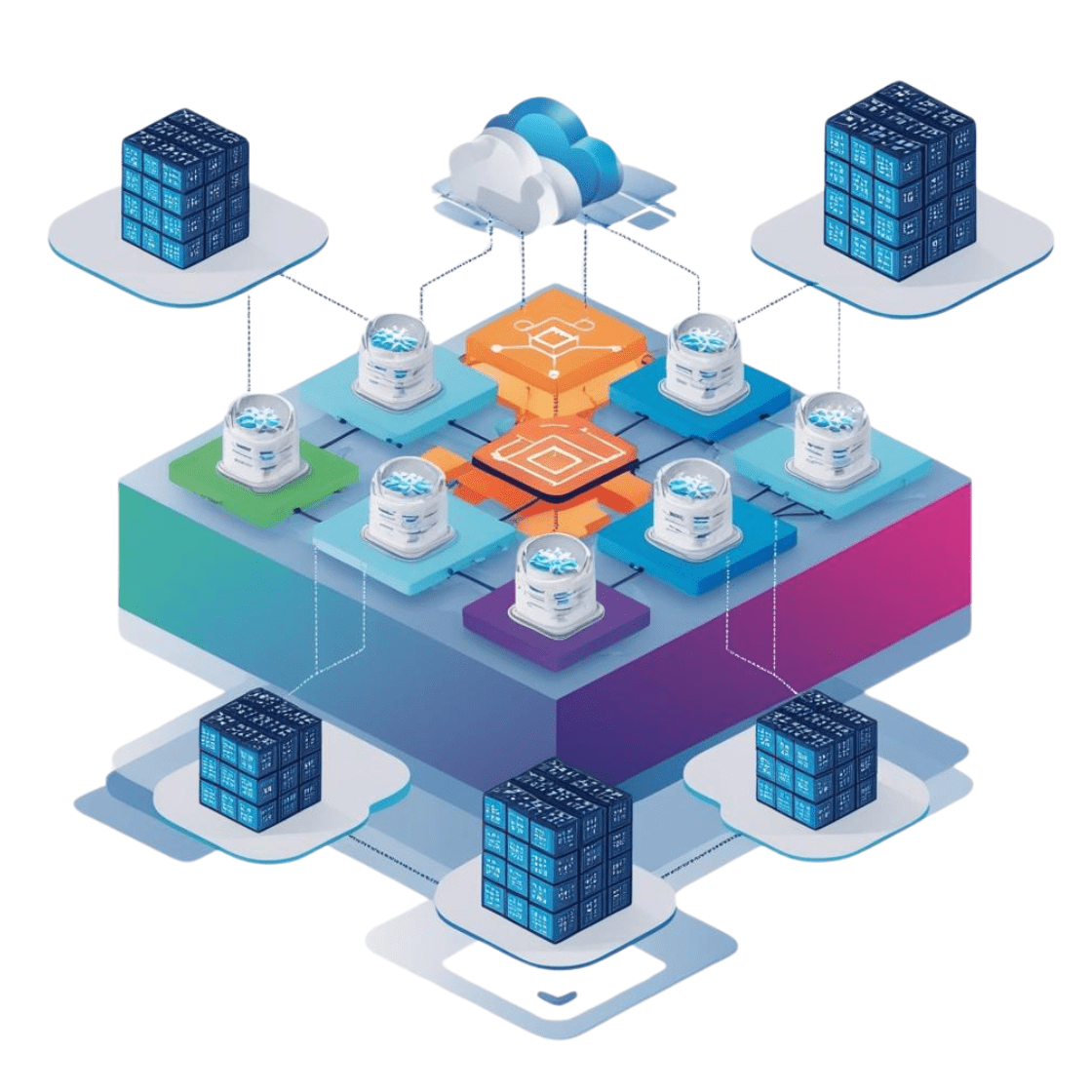

Discover key insights into multi-tenant SaaS architectures, resource optimization, auto-scaling, and how to implement Kubernetes across different environments to ensure smooth growth and deployment. Whether you are just starting or looking to scale, this guide provides actionable steps to enhance your Kubernetes deployment strategy.

1. 📐 Design Your Kubernetes (K8s) Architecture

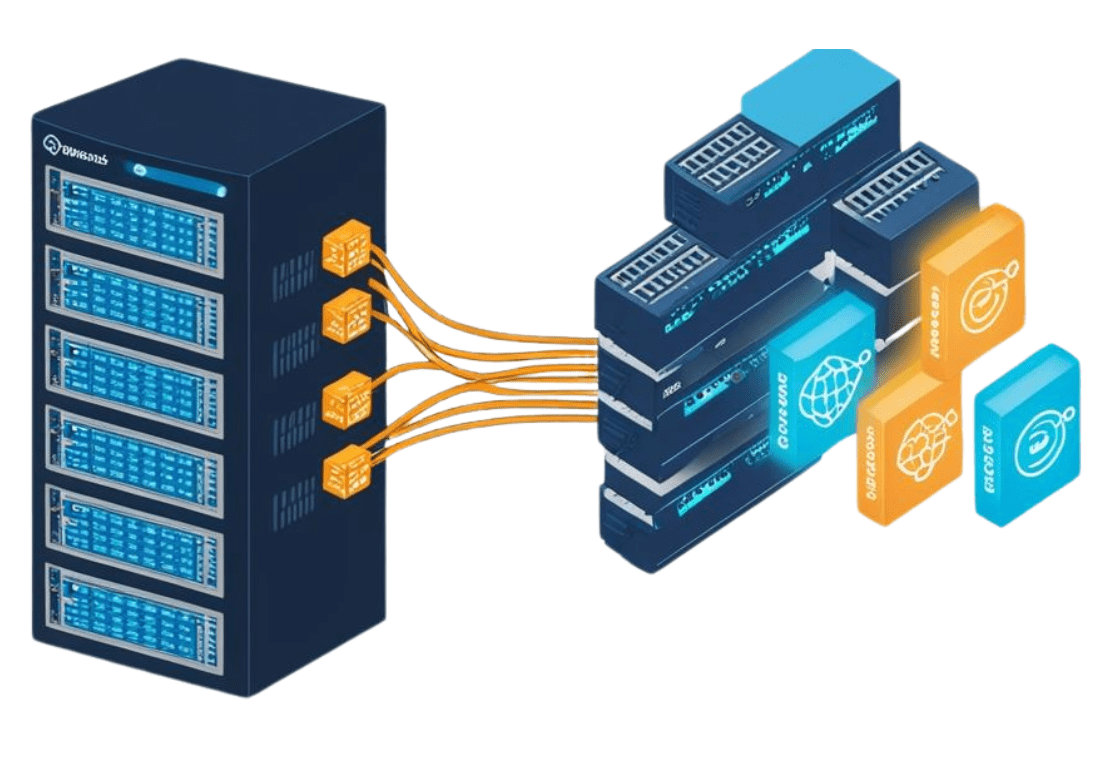

🖥️ Self-Managed : Design Your Kubernetes (K8s) Architecture

Deploy Kubernetes clusters on VPS, dedicated servers, or bare-metal infrastructure. This approach gives you complete control over the infrastructure and can be more cost-efficient for large, steady workloads.

Use Self-Managed When:

- Regulatory Requirements: Industries like healthcare, finance, or government may require data to stay on infrastructure you control due to compliance or data residency laws.

- Customization Needs: If you require granular control over hardware and software configurations.

- Cost Control: For large-scale deployments where the long-term cost of running your own infrastructure is lower than that of equivalent cloud services.

- Low-Latency Requirements: When operating in regions with unreliable internet connectivity or for applications requiring extremely low latency.

Benefits of Self-Managed:

- Full control over infrastructure and data.

- Potential cost savings for large-scale or consistent workloads.

- Avoid vendor lock-in with cloud providers.

- Customizable to meet specific hardware or network requirements.

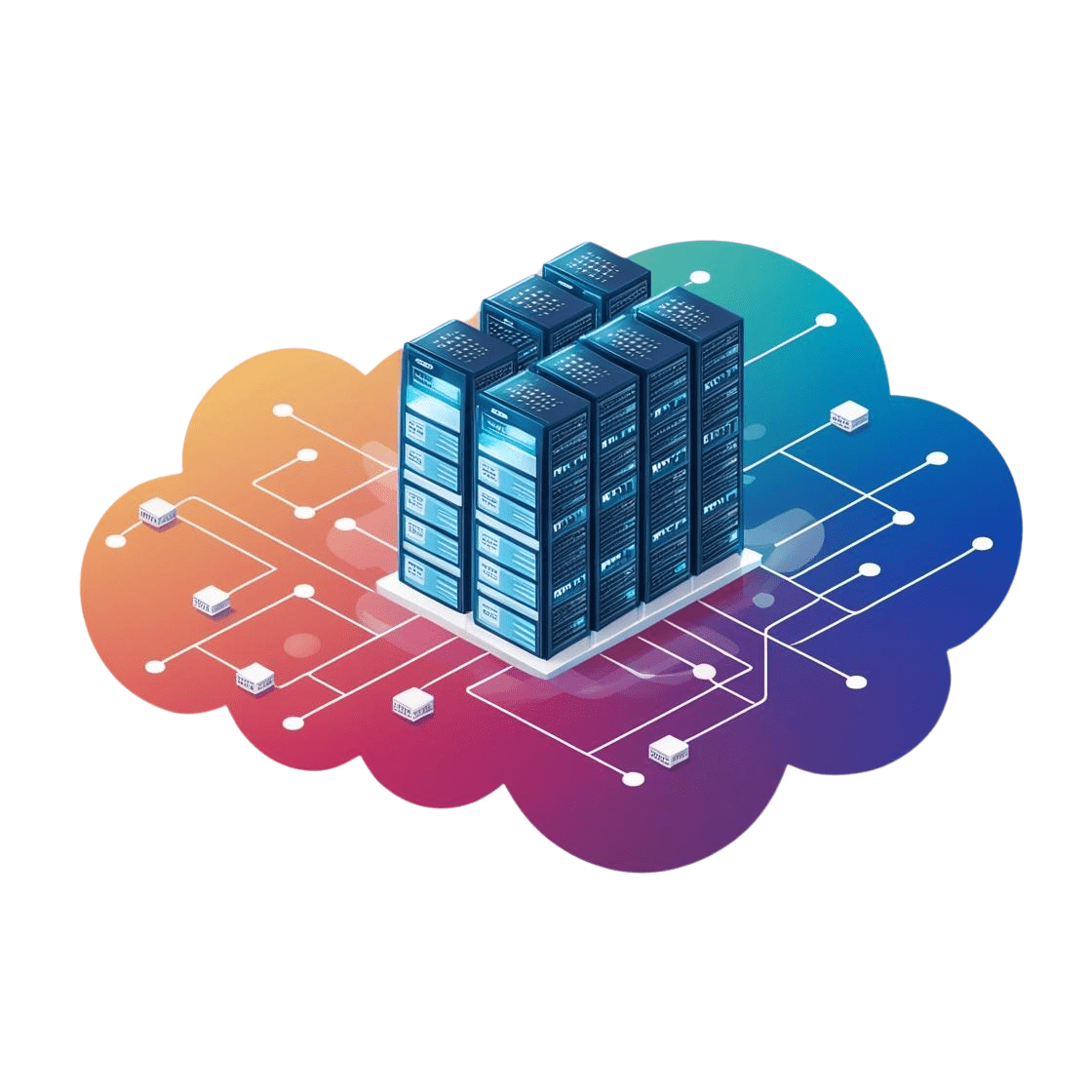

☁️ Managed Kubernetes : Design Your Kubernetes (K8s) Architecture

Use managed Kubernetes services like DOKS (DigitalOcean Kubernetes Service), EKS (Amazon Elastic Kubernetes Service), AKS (Azure Kubernetes Service), or GKE (Google Kubernetes Engine) for scalability and ease of management.

Use Managed Kubernetes When:

- Scalability is Key: Cloud excels at handling dynamic workloads, allowing you to scale resources up or down based on demand.

- Fast Deployment: Cloud services let you spin up clusters quickly without worrying about hardware procurement or setup.

- Global Reach: Ideal for SaaS businesses targeting users in multiple geographic locations, leveraging cloud regions for low-latency access.

- Resource Constraints: If your team lacks the expertise or bandwidth to manage physical infrastructure.

- High Availability: Cloud providers offer built-in high availability and disaster recovery features.

Benefits of Managed Kubernetes:

- Reduced operational overhead with managed Kubernetes services.

- Faster time-to-market for SaaS products.

- Global infrastructure for improved latency and user experience.

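- A provider-managed control plane, with upgrades and security patches handled by the provider (whether upgrades run automatically depends on the provider and your settings).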

- Pay-as-you-go pricing for better cost management during initial stages.

- Built-in support for scalability, fault tolerance, and multi-tenancy.

- Networking, storage, and load balancing that integrate directly with the provider's infrastructure.

Table of Contents

1. 📐 Design Your Kubernetes (K8s) Architecture

2. 🐳 Containerize Your SaaS Application

3. 🏗️ Deploy Kubernetes Clusters

4. 🔗 Set Up Container Network Interface (CNI)

5. 💾 Configure Persistent Storage

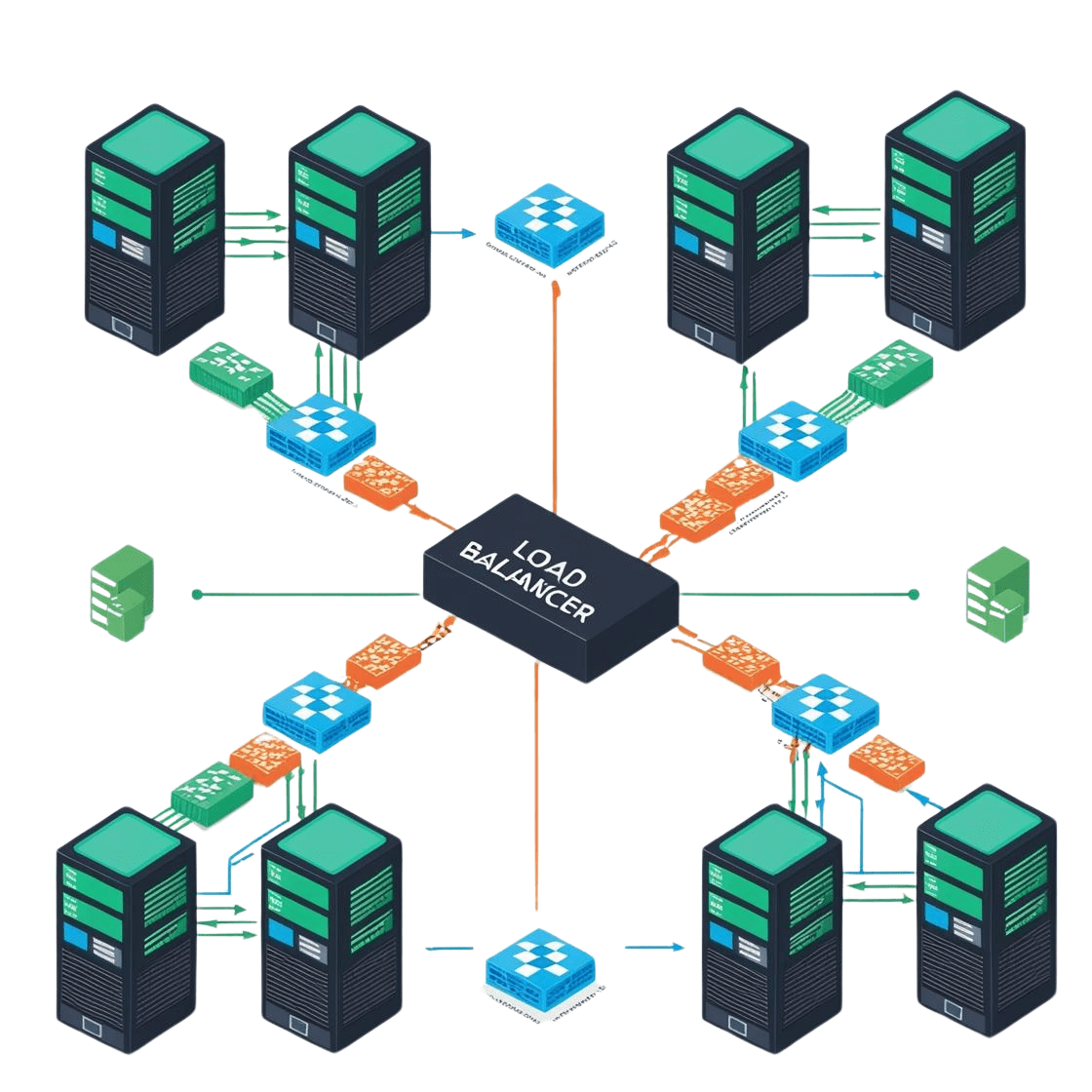

6. ⚖️ Set Up Load Balancing

7. 🌐 Manage Traffic Routing

8. 🔒 Automate Certificates and Secrets

9. ⛵ Simplify Deployment with Helm

10. 📊 Monitoring, Maintenance and Management

11. 📈 Test Scalability and Reliability

12. 🔄 Plan for Continuous Deployment

13. 🖥️ Automate Helm Chart Deployments with Shell Scripts

14. 🐍 Invoke Shell Scripts Programmatically

15. 🔥 Automate Deployment via Your SaaS Billing Solution

2. 🐳 Containerize Your SaaS Application

🖥️ Self-Managed and ☁️ Managed Environments : Containerize Your SaaS Application

Package your SaaS application in a portable format using Docker. Here’s an example Dockerfile for your reference:

```dockerfile
# Use a supported Node.js LTS base image (Node 18 reached end-of-life in April 2025)
FROM node:22

# Set working directory
WORKDIR /app

# Copy package files and install dependencies
COPY package*.json ./
RUN npm install

# Copy the application source code
COPY . .

# Document the port the application listens on
EXPOSE 3000

# Start the application
CMD ["npm", "start"]
```

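Build the Docker Image:

From the directory containing the Dockerfile, create a container image with `docker build -t your-dockerhub-username/your-app-name:latest .`

Test the Image Locally:

Start the container and map the exposed port to check that it works as expected: `docker run --rm -p 3000:3000 your-dockerhub-username/your-app-name:latest`

Push the Image to a Container Registry:

After authenticating with `docker login`, upload your image to a public or private container registry such as Docker Hub, Amazon ECR, or Google Artifact Registry (the successor to Google Container Registry):

```bash
docker push your-dockerhub-username/your-app-name:latest
```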

Deploy Globally:

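Your image can now be pulled by any Kubernetes cluster or OCI-compatible runtime that has access to the registry. For production, tag images with an immutable version (for example `1.0.0` or a Git commit SHA) rather than relying on `latest`.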

3. 🏗️ Deploy Kubernetes Clusters

🖥️ Self-Managed : Deploy Kubernetes Clusters

Self-managed Kubernetes gives you full control and customizability, which suits regulated industries and low-latency requirements. Because container images are portable, the image you built above runs on a self-managed cluster without changes.

Steps and Code Example:

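1. Install Kubernetes with kubeadm (on every node):

```bash
# Prerequisite: a container runtime such as containerd must already be installed.
# The legacy apt.kubernetes.io / packages.cloud.google.com repositories are frozen;
# use the community-owned pkgs.k8s.io repository (replace v1.30 with your target minor version).
sudo apt-get update && sudo apt-get install -y apt-transport-https ca-certificates curl gpg
sudo mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl
```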

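2. Initialize the Kubernetes Control Plane (on the control-plane node):

```bash
sudo kubeadm init --pod-network-cidr=192.168.0.0/16

# After initialization, set up kubeconfig for the current user
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

3. Set Up Networking with Cilium:

```bash
# Install the Cilium CLI
curl -L --remote-name https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz
tar xzvf cilium-linux-amd64.tar.gz
sudo mv cilium /usr/local/bin/

# Deploy Cilium as the cluster's CNI
cilium install
```

To encrypt node-to-node traffic with WireGuard at the same time, run `cilium install --encryption wireguard` instead of the plain `cilium install` (covered in section 4).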

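4. Secure Communication with WireGuard (only needed if you are not using Cilium's built-in WireGuard encryption):

```bash
sudo apt install -y wireguard

# Generate a key pair on each node; umask keeps the private key readable only by you
umask 077
wg genkey | tee privatekey | wg pubkey > publickey

# Use this node's privatekey and the other nodes' public keys to configure WireGuard peers in /etc/wireguard/wg0.conf
```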
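If you do set up WireGuard by hand, each node's /etc/wireguard/wg0.conf needs an `[Interface]` section with that node's private key and one `[Peer]` section per remote node. The sketch below renders such a file in the format read by `wg-quick`; it is illustrative only, and the tunnel addresses (10.10.0.0/24), the port 51820, the endpoint 203.0.113.12 and the key placeholders are hypothetical values you would replace with your own.

```python
from dataclasses import dataclass


@dataclass
class Peer:
    """One remote node in the WireGuard mesh (all values are illustrative)."""
    public_key: str   # contents of that node's publickey file
    endpoint: str     # reachable host:port of the remote node
    allowed_ips: str  # tunnel address(es) routed to this peer


def render_wg_conf(private_key: str, address: str, listen_port: int, peers: list[Peer]) -> str:
    """Render a wg-quick style wg0.conf for one node."""
    lines = [
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {address}",  # used by wg-quick, not by plain `wg setconf`
        f"ListenPort = {listen_port}",
    ]
    for peer in peers:
        lines += [
            "",
            "[Peer]",
            f"PublicKey = {peer.public_key}",
            f"Endpoint = {peer.endpoint}",
            f"AllowedIPs = {peer.allowed_ips}",
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical two-node mesh, seen from node 1
    print(render_wg_conf(
        private_key="<node1-private-key>",
        address="10.10.0.1/24",
        listen_port=51820,
        peers=[Peer("<node2-public-key>", "203.0.113.12:51820", "10.10.0.2/32")],
    ))
```

Write the output to /etc/wireguard/wg0.conf with mode 600 and bring the tunnel up with `sudo wg-quick up wg0`, repeating on every node with its own key and peer list.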

☁️ Managed Kubernetes : Deploy Kubernetes Clusters

Managed setups offer scalability, ease of management, and rapid deployment, which suits businesses that want to focus on applications rather than infrastructure. The same container image runs unchanged on any of the managed services below.

Steps and Code Example:

1. Deploy on DigitalOcean Kubernetes (DOKS):

```bash
sudo snap install doctl

# Authenticate with DigitalOcean
doctl auth init

# Create a Kubernetes cluster with a three-node pool
doctl kubernetes cluster create my-cluster --region nyc1 --node-pool "name=default;size=s-2vcpu-4gb;count=3"
```

2. Deploy on Amazon EKS:

```bash
# Install eksctl (the project now lives under the eksctl-io organization)
curl -sL "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp
sudo mv /tmp/eksctl /usr/local/bin

# Create an EKS cluster (requires configured AWS credentials)
eksctl create cluster --name my-cluster --region us-west-2 --nodes 3
```

3. Deploy on Google Kubernetes Engine (GKE):

```bash
curl https://sdk.cloud.google.com | bash
exec -l $SHELL
gcloud init

# kubectl authenticates to GKE through this plugin
gcloud components install gke-gcloud-auth-plugin

# Enable Kubernetes Engine API
gcloud services enable container.googleapis.com

# Create a GKE cluster
gcloud container clusters create my-cluster --num-nodes 3 --zone us-central1-a
```

4. Deploy on Azure Kubernetes Service (AKS):

```bash
curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

# Sign in to Azure
az login

# Create a resource group and an AKS cluster
az group create --name myResourceGroup --location eastus
az aks create --resource-group myResourceGroup --name myAKSCluster --node-count 3 --enable-addons monitoring --generate-ssh-keys

# Merge the cluster credentials into your kubeconfig
az aks get-credentials --resource-group myResourceGroup --name myAKSCluster
```

4. 🔗 Set Up Container Network Interface (CNI)

🖥️ Self-Managed : Set Up Container Network Interface (CNI)

- Set up Kubernetes on VPS, bare-metal servers, or dedicated machines.

- Use Cilium for advanced networking and WireGuard for secure communication between nodes.

Steps and Code Example:

1. Install Cilium CLI (skip if you already installed it in section 3):

```bash
curl -L --remote-name https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz
tar xzvf cilium-linux-amd64.tar.gz
sudo mv cilium /usr/local/bin/
```

2. Deploy Cilium with WireGuard Support:

```bash
cilium install --encryption wireguard
```

This deploys Cilium as the CNI (Container Network Interface) and enables WireGuard for encrypted node-to-node traffic. Cilium generates and distributes the WireGuard keys itself, so no manual wg0.conf is needed.

3. Verify Cilium and WireGuard Deployment:

```bash
cilium status --wait

# Confirm that WireGuard is enabled (run inside the agent pod; the binary is named cilium-dbg since Cilium 1.15)
kubectl -n kube-system exec ds/cilium -- cilium-dbg status | grep Encryption
```

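4. Join Worker Nodes:

`kubeadm init` prints the exact join command, including the token and CA certificate hash; run it on each worker node:

```bash
sudo kubeadm join <control-plane-host>:6443 --token <token> \
    --discovery-token-ca-cert-hash sha256:<hash>
```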

☁️ Managed Kubernetes : Set Up Container Network Interface (CNI)

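- Deploy clusters using DOKS, EKS, AKS, or GKE; each ships with a provider-configured CNI, so pod networking works without extra setup.

- Use the provider's VPC (or VNet) networking to keep node-to-node and intra-cluster traffic on private networks.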
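For a multi-tenant SaaS, a common pattern is to give each tenant its own namespace and isolate it with NetworkPolicy objects, which Cilium enforces. Below is a minimal sketch that builds a policy admitting ingress only from pods in the same namespace; the tenant namespace name is hypothetical, and on managed clusters enforcement depends on the provider's network policy support being enabled. Since `kubectl apply -f` accepts JSON as well as YAML, the printed output can be piped straight into `kubectl apply -f -`.

```python
import json


def tenant_isolation_policy(namespace: str) -> dict:
    """NetworkPolicy that only admits ingress from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "same-namespace-only", "namespace": namespace},
        "spec": {
            # An empty podSelector selects every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A bare podSelector in "from" matches pods in the policy's own namespace.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


if __name__ == "__main__":
    # Hypothetical tenant namespace
    print(json.dumps(tenant_isolation_policy("tenant-acme"), indent=2))
```

This policy also blocks traffic from your ingress controller's namespace, so in practice you would add a second `from` entry with a `namespaceSelector` matching that namespace.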

5. 💾 Configure Persistent Storage

🖥️ Self-Managed : Use Rook-Ceph for Scalable Storage

Rook-Ceph provides a scalable and highly available storage solution for self-managed Kubernetes clusters.

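Steps and Code Example:

1. Install the Rook-Ceph Operator: clone the Rook repository (https://github.com/rook/rook) at the release you want. Current releases keep the example manifests in `deploy/examples` (older guides reference `cluster/examples/kubernetes/ceph`). From that directory, run `kubectl create -f crds.yaml -f common.yaml -f operator.yaml`.

2. Deploy a Ceph Cluster: apply the example cluster definition from the same directory with `kubectl create -f cluster.yaml`, adjusting it to your nodes and disks.

3. Create a StorageClass (this assumes a CephBlockPool named `replicapool` exists; Rook's `csi/rbd/storageclass.yaml` example creates both):

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  clusterID: rook-ceph  # namespace of the Rook cluster
  pool: replicapool
  imageFeatures: layering
  # Secrets created by Rook that the Ceph CSI driver uses to provision and mount volumes
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
allowVolumeExpansion: true
```

4. Use the StorageClass in a PVC: Create a PersistentVolumeClaim (PVC) for your application.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: rook-ceph-block
```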

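☁️ Managed Kubernetes : Use Provider-Specific Storage Solutions

Cloud providers offer managed storage options for persistent and object storage. Below are examples for AWS EBS, Azure Disk, and GCP Persistent Disk.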

1. AWS EBS (Persistent Storage):

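1. Create a StorageClass for EBS (on EKS this requires the Amazon EBS CSI driver add-on; the in-tree `kubernetes.io/aws-ebs` provisioner is deprecated):

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-sc
provisioner: ebs.csi.aws.com
parameters:
  type: gp3  # current general-purpose SSD volume type
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
# Create the volume in the availability zone where the pod is scheduled
volumeBindingMode: WaitForFirstConsumer
```

2. Create a PVC Using EBS:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: ebs-sc
```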

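2. Azure Disk (Persistent Storage):

1. Create a StorageClass for Azure Disk (AKS uses the Azure Disk CSI driver; the in-tree `kubernetes.io/azure-disk` provisioner is deprecated, and AKS also ships built-in classes such as `managed-csi-premium`):

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: azure-disk-sc
provisioner: disk.csi.azure.com
parameters:
  skuName: Premium_LRS
reclaimPolicy: Delete
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer
```

2. Create a PVC Using Azure Disk:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azure-disk-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: azure-disk-sc
```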

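3. GCP Persistent Disk (Persistent Storage):

1. Create a StorageClass for GCP PD (GKE uses the Compute Engine Persistent Disk CSI driver; the in-tree `kubernetes.io/gce-pd` provisioner is deprecated):

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gcp-pd-sc
provisioner: pd.csi.storage.gke.io
parameters:
  type: pd-ssd
reclaimPolicy: Delete
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer
```

Apply the StorageClass with `kubectl apply -f`, passing the file that contains the manifest.

2. Create a PVC Using GCP Persistent Disk:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gcp-pd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: gcp-pd-sc
```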

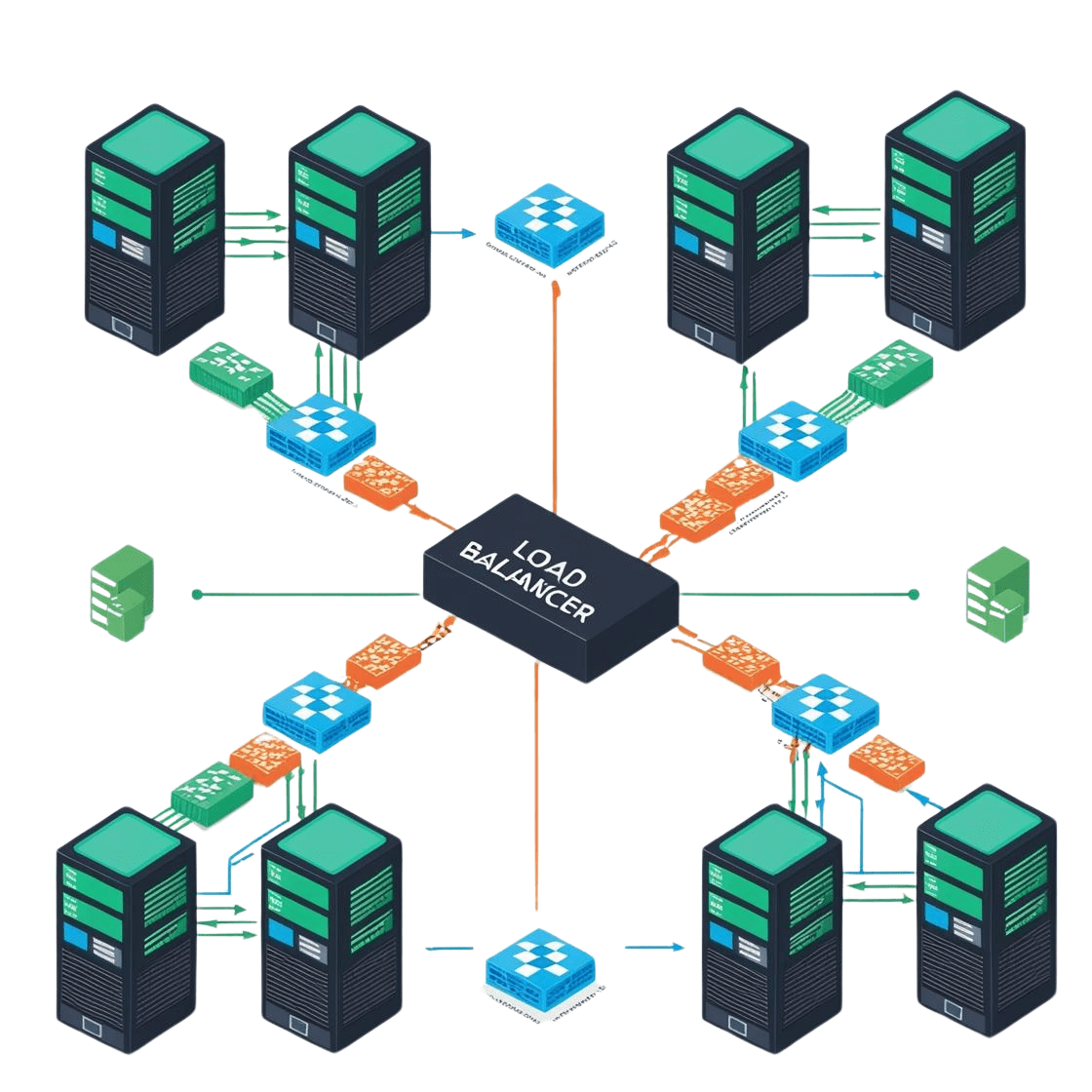

6. ⚖️ Set Up Load Balancing

Ensure reliable distribution of traffic across your Kubernetes nodes and services with tailored load-balancing solutions.

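🖥️ Self-Managed Solutions : Set Up Load Balancing

Bare-metal clusters have no cloud load balancer, so Services of type LoadBalancer stay in the pending state until you deploy MetalLB to assign them external IPs.

1. Install MetalLB, for example with Helm: `helm repo add metallb https://metallb.github.io/metallb`, then `helm install metallb metallb/metallb --namespace metallb-system --create-namespace`.

2. Configure Layer 2 Mode: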

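Since MetalLB v0.13, address pools are configured with custom resources rather than the older `config` ConfigMap:

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default-pool
  namespace: metallb-system
spec:
  addresses:
    - 192.168.1.100-192.168.1.200
---
# Announce the pool's addresses on the local network using Layer 2 (ARP/NDP)
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default-l2
  namespace: metallb-system
spec:
  ipAddressPools:
    - default-pool
```

Apply the resources with `kubectl apply -f`, passing the file that contains them.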

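3. Create a Service Using MetalLB:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
  namespace: default
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080  # must match the port your container listens on (3000 in the Dockerfile above)
```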
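In Layer 2 mode MetalLB answers ARP requests for the pool's addresses, so the range must sit inside the nodes' subnet and must not overlap addresses handed out by DHCP or used by other hosts. A small sanity check like the one below can catch typos before you apply the configuration. It is a sketch that only understands the `first-last` range form (MetalLB also accepts CIDR notation), and the node subnet 192.168.1.0/24 is an assumed example.

```python
import ipaddress


def parse_pool(pool: str) -> tuple[ipaddress.IPv4Address, ipaddress.IPv4Address]:
    """Parse a MetalLB-style 'first-last' IPv4 range."""
    first_s, last_s = pool.split("-")
    first = ipaddress.IPv4Address(first_s.strip())
    last = ipaddress.IPv4Address(last_s.strip())
    if first > last:
        raise ValueError(f"range start {first} is after range end {last}")
    return first, last


def pool_size(pool: str) -> int:
    """Number of addresses in the range, inclusive of both ends."""
    first, last = parse_pool(pool)
    return int(last) - int(first) + 1


def pool_in_subnet(pool: str, node_subnet: str) -> bool:
    """True if the whole range lies inside the nodes' subnet (needed for Layer 2 mode)."""
    net = ipaddress.IPv4Network(node_subnet)
    first, last = parse_pool(pool)
    # A network is contiguous, so checking both ends covers every address in between.
    return first in net and last in net


if __name__ == "__main__":
    pool = "192.168.1.100-192.168.1.200"
    print(pool_size(pool), pool_in_subnet(pool, "192.168.1.0/24"))
```

For the pool used above, `pool_size` returns 101, which bounds how many LoadBalancer Services can receive distinct IPs from it.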

☁️ Managed Kubernetes Solutions : Set Up Load Balancing

Use the cloud-native load balancers provided by DOKS, EKS, AKS, or GKE: creating a Service of type LoadBalancer provisions one automatically.

AWS EKS Example:

1. Create a LoadBalancer Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-eks-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: "nlb"  # Use a Network Load Balancer
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

Apply the service with `kubectl apply -f`, passing the manifest file.

2. Verify the Load Balancer: run `kubectl get service my-eks-service`; once provisioning finishes, the EXTERNAL-IP column shows the load balancer's DNS name.

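Azure AKS Example:

1. Create a LoadBalancer Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-aks-service
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"  # Internal load balancer
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

Apply the service with `kubectl apply -f`, passing the manifest file.

2. Verify the Load Balancer: run `kubectl get service my-aks-service`. Because of the internal annotation, the EXTERNAL-IP column shows a private IP from the cluster's virtual network rather than a public one; remove the annotation for an internet-facing load balancer.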

GCP GKE Example:

1. Create a LoadBalancer Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-gke-service
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

2. Verify the Load Balancer: retrieve the external IP with `kubectl get service my-gke-service`.

7. 🌐 Manage Traffic Routing

Effectively route external traffic to your Kubernetes services using robust ingress solutions.

🖥️ Self-Managed Solutions: Manage Traffic Routing

Configure the NGINX Ingress Controller (ingress-nginx) for secure, scalable routing to Kubernetes services.

☁️ Managed Kubernetes Solutions: Manage Traffic Routing

Use cloud-specific ingress solutions such as the AWS Load Balancer Controller (formerly the AWS ALB Ingress Controller) on EKS or GKE Ingress on GKE for tight integration with the provider's load balancers.
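Both approaches consume a standard `networking.k8s.io/v1` Ingress; what changes between environments is mostly the ingress class. Below is a sketch that builds such an Ingress as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts as readily as YAML. The host `app.example.com`, the Service `my-service` on port 80, and the class name `nginx` (the default for ingress-nginx; the AWS Load Balancer Controller uses `alb`) are assumptions, and the optional cert-manager annotation only has an effect if cert-manager is installed.

```python
import json
from typing import Optional


def build_ingress(name: str, namespace: str, host: str, service: str, port: int,
                  ingress_class: str = "nginx",
                  cluster_issuer: Optional[str] = None) -> dict:
    """Build a networking.k8s.io/v1 Ingress that routes `host` to one Service."""
    metadata: dict = {"name": name, "namespace": namespace}
    spec: dict = {
        "ingressClassName": ingress_class,
        "rules": [{
            "host": host,
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": service, "port": {"number": port}}},
            }]},
        }],
    }
    if cluster_issuer:
        # cert-manager watches this annotation and stores the issued certificate in the TLS secret.
        metadata["annotations"] = {"cert-manager.io/cluster-issuer": cluster_issuer}
        spec["tls"] = [{"hosts": [host], "secretName": f"{name}-tls"}]
    return {"apiVersion": "networking.k8s.io/v1", "kind": "Ingress",
            "metadata": metadata, "spec": spec}


if __name__ == "__main__":
    print(json.dumps(build_ingress("my-app", "default", "app.example.com", "my-service", 80), indent=2))
```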

8. 🔒 Automate Certificates and Secrets

Ensure secure management of certificates and sensitive data in your Kubernetes cluster.

✔️ Use Cert Manager:

- Automate Let's Encrypt TLS/SSL certificate issuance and renewal.
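cert-manager issues certificates through an Issuer or ClusterIssuer resource. The sketch below builds a ClusterIssuer for Let's Encrypt's ACME endpoint with an HTTP-01 solver that answers challenges through your ingress controller. The email address and the `nginx` ingress class are placeholders, and starting with the staging endpoint avoids Let's Encrypt's production rate limits while you test.

```python
import json

LETSENCRYPT_PROD = "https://acme-v02.api.letsencrypt.org/directory"
LETSENCRYPT_STAGING = "https://acme-staging-v02.api.letsencrypt.org/directory"


def letsencrypt_cluster_issuer(email: str, staging: bool = True,
                               ingress_class: str = "nginx") -> dict:
    """ClusterIssuer for Let's Encrypt using the ACME HTTP-01 challenge."""
    name = "letsencrypt-staging" if staging else "letsencrypt-prod"
    return {
        "apiVersion": "cert-manager.io/v1",
        "kind": "ClusterIssuer",
        "metadata": {"name": name},
        "spec": {
            "acme": {
                "server": LETSENCRYPT_STAGING if staging else LETSENCRYPT_PROD,
                "email": email,  # used by Let's Encrypt for expiry notices
                # Secret in which cert-manager stores the ACME account private key
                "privateKeySecretRef": {"name": f"{name}-account-key"},
                # ingressClassName is available in recent cert-manager releases; older ones use "class"
                "solvers": [{"http01": {"ingress": {"ingressClassName": ingress_class}}}],
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(letsencrypt_cluster_issuer("ops@example.com"), indent=2))
```

Ingresses then request certificates by referencing the issuer in the `cert-manager.io/cluster-issuer` annotation.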

🔑 Store Secrets Securely:

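- Store sensitive data such as API keys and database credentials in Kubernetes Secrets. Secrets are only base64-encoded by default, so enable encryption at rest, restrict access with RBAC, or use an external secret manager.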
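To make the "encoded, not encrypted" point concrete, here is a minimal sketch that builds an Opaque Secret manifest. Values under `data` must be base64-encoded (the API also accepts plain strings under `stringData`), and anyone who can read the object can decode them. The secret name, namespace and key below are hypothetical.

```python
import base64
import json


def build_secret(name: str, namespace: str, values: dict[str, str]) -> dict:
    """Build an Opaque Secret; every value under `data` must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # base64 is an encoding, not encryption: reading the Secret reveals these values.
        "data": {key: base64.b64encode(value.encode()).decode() for key, value in values.items()},
    }


if __name__ == "__main__":
    secret = build_secret("db-credentials", "tenant-acme", {"DB_PASSWORD": "s3cr3t"})
    print(json.dumps(secret, indent=2))
```

Running it shows `DB_PASSWORD` stored as a string that decodes straight back to the password, which is why encryption at rest and tight RBAC matter.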

9. ⛵ Simplify Deployment with Helm

Helm is a Kubernetes package manager that lets you package and distribute your application as a Helm chart, so every deployment uses the same versioned, repeatable configuration.

Steps to Package with Helm:

1. Create a Helm Chart:

Scaffold a new Helm chart with `helm create my-app`.

The directory includes:

- Chart.yaml: Metadata about your chart.

- values.yaml: Default application configurations.

- templates/: Kubernetes manifests rendered using values.yaml.

2. Customize Templates:

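Modify templates/deployment.yaml to use your Docker image:

```yaml
containers:
  - name: your-app-container
    image: your-dockerhub-username/your-app-name:latest
    ports:
      # Keep the name: the scaffolded Service and probes refer to this port as "http"
      - name: http
        containerPort: 3000
```

Alternatively, keep the scaffolded template and set `image.repository` and `image.tag` in values.yaml, which lets you change the image per environment without editing templates.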

3. Package the Helm Chart:

Create a .tgz package for sharing or deployment with `helm package my-app`; with the scaffold's default version this produces `my-app-0.1.0.tgz`.

4. Host the Chart for Global Use:

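Publish the Helm chart to a chart repository (for example, GitHub Pages serving an index.yaml) or push it to an OCI registry with `helm push`. Artifact Hub does not host charts itself, but it can list your repository so others can discover it.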

5. Deploy the Application Globally:

Install the chart on each cluster with `helm install my-app ./my-app-0.1.0.tgz`, or install it by name from your repository.

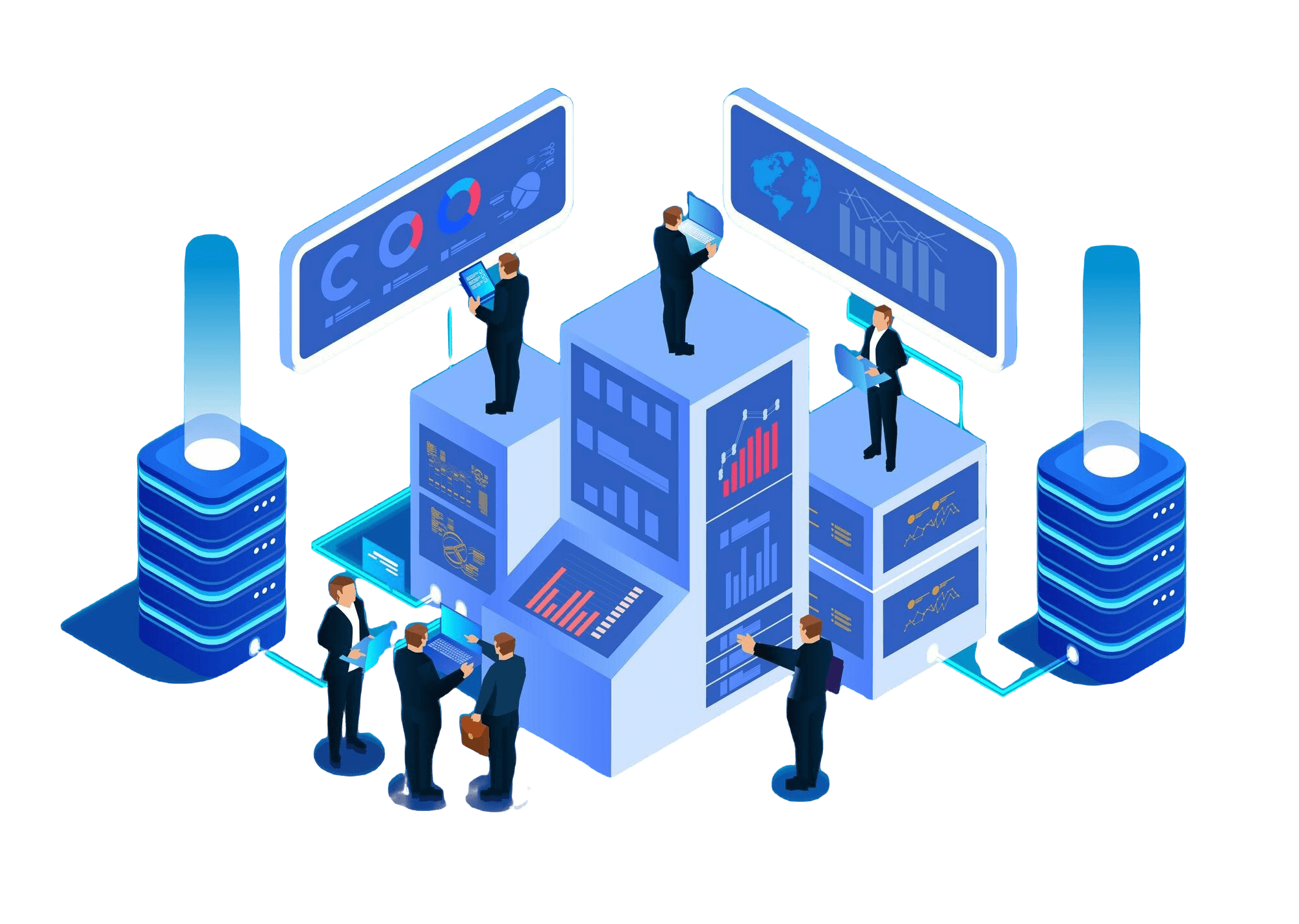

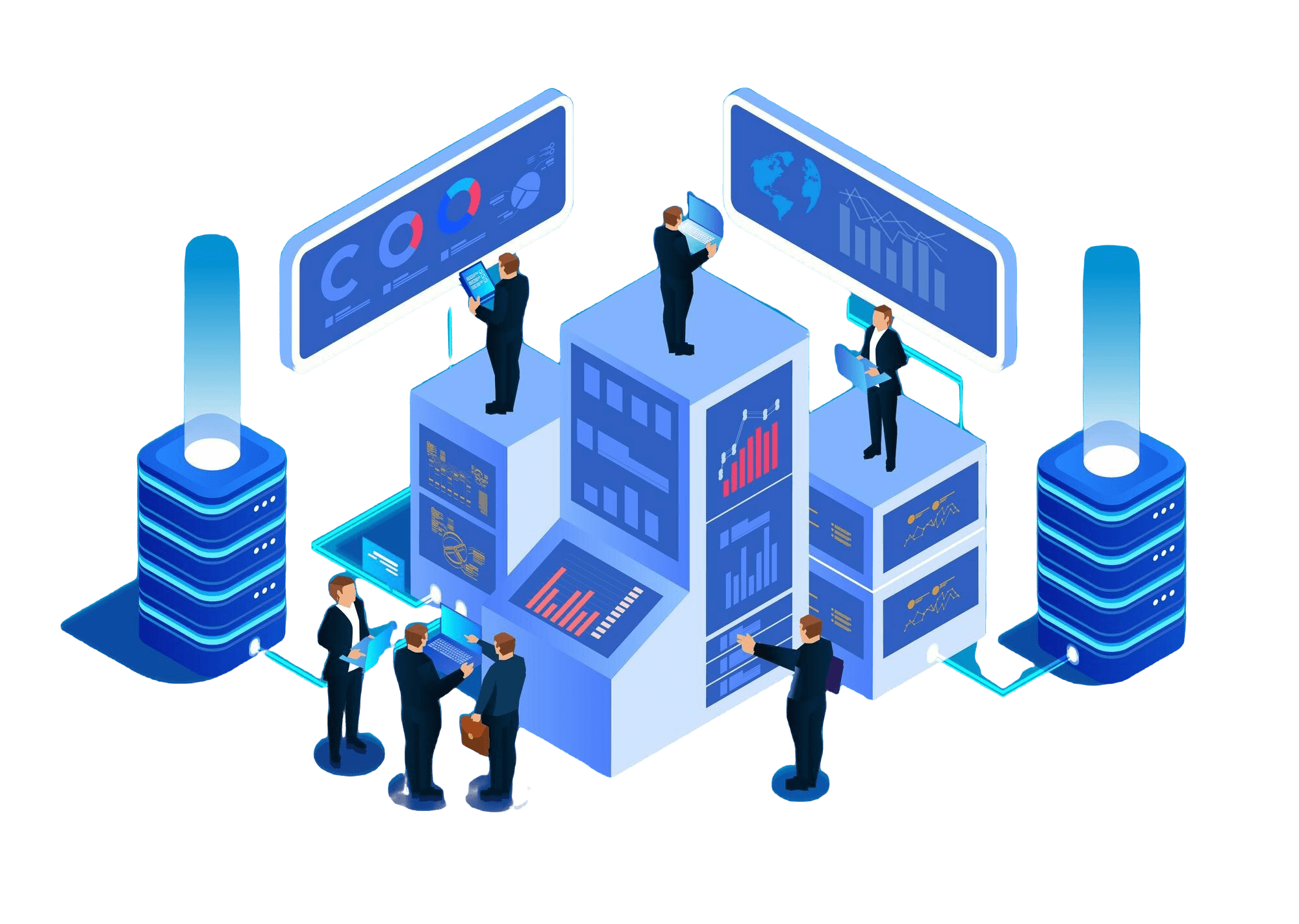

10. 📊 Monitoring, Maintenance and Management

Set up monitoring for your Kubernetes cluster to track performance and reliability, using the following tools:

🖥️ Self-Managed : Monitoring, Maintenance and Management

Kubernetes Dashboard: Real-time cluster monitoring via a graphical interface.

☁️ Managed : Monitoring, Maintenance and Management

Deploy the Kubernetes Dashboard for real-time cluster monitoring and efficient resource management.

Use cloud-native monitoring tools such as:

- AWS CloudWatch

- Azure Monitor

- GCP Operations Suite

Universal Monitoring Tools:

- Prometheus: Metrics collection and alerting.

- Grafana: Visualization and dashboards.

- kube-state-metrics: Metrics about the state of Kubernetes objects such as Deployments, Pods, and nodes.

- ELK Stack: Log monitoring (Elasticsearch, Logstash, Kibana).

- Loki: Lightweight log aggregation.

- Jaeger: Distributed tracing for microservices.

These tools provide robust monitoring and insights into your Kubernetes cluster.
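Once Prometheus is scraping kube-state-metrics, you can also pull numbers into your own tooling through Prometheus' HTTP API. The following sketch calls the instant-query endpoint `/api/v1/query` and flattens the vector result into a dictionary. The base URL (for example a local `kubectl port-forward` to your Prometheus Service) is an assumption that depends on how you installed Prometheus.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def instant_query_url(base_url: str, promql: str) -> str:
    """URL for Prometheus' instant-query endpoint."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(response: dict) -> dict[str, float]:
    """Map each sample's label set (as 'k=v,...') to its value."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response.get('error')}")
    out = {}
    for sample in response["data"]["result"]:
        labels = ",".join(f"{k}={v}" for k, v in sorted(sample["metric"].items()))
        _timestamp, value = sample["value"]  # value is [unix_time, "string"]
        out[labels] = float(value)
    return out


def query(base_url: str, promql: str) -> dict[str, float]:
    """Run an instant query against a reachable Prometheus server."""
    with urlopen(instant_query_url(base_url, promql), timeout=10) as resp:
        return parse_vector(json.load(resp))
```

For example, `query("http://localhost:9090", "sum by (namespace) (kube_pod_container_status_restarts_total)")` would return container restart counts per namespace, a quick signal for spotting an unhealthy tenant.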

11. 📈 Test Scalability and Reliability

- Perform load tests to confirm that your SaaS application:

  - Scales efficiently under increased demand.

  - Meets redundancy and failover requirements for high availability.
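When load testing, it helps to know what the HorizontalPodAutoscaler should do so you can compare it with what you observe. The core rule documented for the HPA is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured minimum and maximum. The sketch below reproduces only that rule; the real controller also accounts for pod readiness, missing metrics and stabilization windows, and the min/max defaults here are hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Simplified HPA scaling rule: ceil(current * ratio), with tolerance and bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: the HPA leaves the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods averaging 90% CPU against a 60% target should scale to 5.
    print(hpa_desired_replicas(3, 90, 60))
```

If your load test pushes average CPU to 90% against a 60% target with three replicas and the cluster settles on a number other than five, look for pending pods, resource quotas or a `maxReplicas` limit.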

12. 🔄 Plan for Continuous Deployment

Streamline your deployment process and improve reliability with these practices:

- Use CI/CD Pipelines: Automate application deployments to minimize manual errors.

- Adopt GitOps Tools: Tools like Argo CD or Flux continuously reconcile the cluster with the Kubernetes configuration stored in your Git repository.
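With Argo CD, each deployed application is described by an `Application` resource that points at a path in Git. The sketch below builds one with automated sync, pruning and self-healing enabled. The repository URL, the `charts/my-app` path and the target namespace are hypothetical, and it assumes Argo CD runs in its default `argocd` namespace and deploys to the cluster it is installed in.

```python
import json


def argocd_application(name: str, repo_url: str, path: str, namespace: str,
                       revision: str = "HEAD") -> dict:
    """Argo CD Application that keeps `namespace` in sync with `path` in Git."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "targetRevision": revision, "path": path},
            # The in-cluster API server address, i.e. the cluster Argo CD runs in
            "destination": {"server": "https://kubernetes.default.svc", "namespace": namespace},
            "syncPolicy": {
                # prune deletes resources removed from Git; selfHeal reverts manual drift
                "automated": {"prune": True, "selfHeal": True},
                "syncOptions": ["CreateNamespace=true"],
            },
        },
    }


if __name__ == "__main__":
    app = argocd_application("my-app", "https://github.com/example/deploy-configs.git",
                             "charts/my-app", "my-namespace")
    print(json.dumps(app, indent=2))
```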

13. 🖥️ Automate Helm Chart Deployments with Shell Scripts

Automate deployments to streamline processes and enhance reliability:

- Automate Helm chart installation, upgrades, and rollbacks.

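- Manage namespaces, configurations, and persistent storage setups.

Example Script (saved as `deploy-app.sh`):

```bash
#!/usr/bin/env bash
# Stop on the first failing command, unset variable, or failed pipe
set -euo pipefail

echo "Deploying Application Chart..."
# Install the release if it does not exist yet, otherwise upgrade it in place
helm upgrade --install my-app ./charts/my-app --namespace my-namespace --create-namespace -f values.yaml
echo "Deployment Completed!"
```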

14. 🐍 Invoke Shell Scripts Programmatically

Trigger the deployment script from your own services, for example a provisioning API written in Python, so deployments can be started without anyone running commands by hand.

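Example Script:

```python
import subprocess


def deploy_application():
    # Run the deployment script; check=True raises CalledProcessError if it exits non-zero
    subprocess.run(["bash", "./deploy-app.sh"], check=True)
    print("Deployment Triggered!")


deploy_application()
```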

15. 🔥 Automate Deployment via Your SaaS Billing Solution

Use your SaaS billing solution to:

- Automatically provision Kubernetes resources for new customers.

- Trigger Helm deployments directly after a successful subscription.

Workflow Example:

- User subscribes → SaaS system provisions Kubernetes namespace → Deploys Helm chart using the automated script.
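The workflow above can be sketched as a small handler that turns a subscription event into a per-tenant Helm release. Everything about the event is hypothetical: the `subscription.created` type and the `customer_id` and `plan` fields stand in for whatever your billing provider sends, `tenant.plan` is an assumed chart value, and a real webhook endpoint must also verify the provider's signature before acting. The handler defaults to a dry run that only returns the command.

```python
import re
import subprocess


def tenant_namespace(customer_id: str) -> str:
    """Derive a DNS-1123 name (lowercase alphanumerics and '-') for the tenant."""
    slug = re.sub(r"[^a-z0-9-]+", "-", customer_id.lower()).strip("-")
    if not slug:
        raise ValueError(f"cannot derive a namespace from {customer_id!r}")
    # Helm release names are limited to 53 characters; cap here so the name works for both.
    return f"tenant-{slug}"[:53].rstrip("-")


def helm_command(event: dict, chart: str = "./charts/my-app") -> list[str]:
    """Build the helm command that provisions one tenant (hypothetical event schema)."""
    if event.get("type") != "subscription.created":
        raise ValueError(f"unsupported event type: {event.get('type')}")
    ns = tenant_namespace(event["customer_id"])
    return ["helm", "upgrade", "--install", ns, chart,
            "--namespace", ns, "--create-namespace",
            "--set", f"tenant.plan={event['plan']}"]


def handle_subscription_event(event: dict, dry_run: bool = True) -> list[str]:
    """Provision the tenant; with dry_run=True only the command is returned."""
    cmd = helm_command(event)
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd
```

Because `helm upgrade --install` is idempotent, a billing provider that retries the same webhook will not create duplicate tenants.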
